The Prompt Token Counter for OpenAI Models is an online tool that counts the tokens in a prompt before you send it to one of OpenAI's language models. Knowing the count helps you stay within the token limits of models such as GPT-3.5 and GPT-4 and keep usage costs predictable. Whether you're a developer, researcher, or content creator, managing token usage is key to getting the most out of these models, and the tool's simple interface makes token counting accessible without technical expertise.

Features of the Prompt Token Counter for OpenAI Models

The Prompt Token Counter offers a variety of features that cater to the needs of users working with OpenAI's language models. Here are some of the key features:

-

Real-Time Token Counting: The tool provides instant feedback on the number of tokens in your input prompt, allowing you to adjust your text accordingly before submission.

-

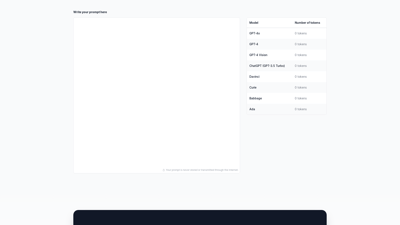

Multiple Model Support: Users can select from various OpenAI models, including GPT-3.5, GPT-4, and others, to see the specific token limits and usage for each model.

-

Cost Management: By keeping track of token usage, users can effectively manage costs associated with using OpenAI's models, avoiding unexpected charges.

-

User-Friendly Interface: The intuitive design of the tool makes it easy for anyone, regardless of technical expertise, to navigate and utilize its features.

-

Prompt Optimization Tips: The tool offers suggestions on how to refine prompts to ensure they are concise and effective, enhancing the quality of the generated responses.

-

Documentation and Support: Comprehensive documentation is available to guide users through the features and functionalities of the tool, ensuring they can make the most of their experience.

-

Secure and Private: The tool ensures that user prompts are never stored or transmitted over the internet, maintaining privacy and security during usage.

-

Versatile Applications: Whether for academic research, software development, or content creation, the token counter is versatile enough to meet various user needs.

Frequently Asked Questions about the Prompt Token Counter for OpenAI Models

What is a token?

A token is the smallest unit of text that a language model processes. Depending on how the text is segmented, a token can be a whole word, part of a word (a subword), or a single character. Understanding tokens is the basis for managing your interactions with AI models.
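To make the idea of words, subwords, and characters concrete, here is a minimal sketch of a toy tokenizer. It is not the tokenizer OpenAI models use (those rely on a learned byte pair encoding); the function name `toy_tokenize` and the vocabulary `VOCAB` are hypothetical and chosen only for illustration.

```python
def toy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match segmentation over a hypothetical vocabulary.

    Falls back to a single character when no vocabulary entry matches.
    """
    tokens = []
    i = 0
    max_len = max((len(v) for v in vocab), default=1)
    while i < len(text):
        # Try the longest candidate first, shrinking until something matches.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


# Hypothetical vocabulary, chosen only for illustration.
VOCAB = {"token", "count", "ing", "s", " "}

tokens = toy_tokenize("counting tokens", VOCAB)
print(tokens, len(tokens))
```

Here "counting" splits into a word-like piece and a subword ("count" + "ing"), and text with no vocabulary match degrades to single characters, which is why token counts rarely equal word counts.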

Why is token counting important?

Each model has a token limit that your input and the generated output must fit within together. Counting tokens before you submit keeps requests from being rejected for exceeding that limit, and because usage is billed per token, it also helps you manage costs.
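The two checks described above, fitting within the limit and estimating cost, can be sketched as follows. The `Budget` class, the `check_request` function, and every number in the example are assumptions for illustration; they are not real limits or prices, so substitute the current values for the model you use.

```python
from dataclasses import dataclass


@dataclass
class Budget:
    context_limit: int          # total tokens the model accepts (assumed value)
    price_per_1k_input: float   # assumed price in USD per 1,000 input tokens
    price_per_1k_output: float  # assumed price in USD per 1,000 output tokens


def check_request(prompt_tokens: int, max_output_tokens: int, budget: Budget):
    """Return (fits, worst_case_cost) for a single request."""
    total = prompt_tokens + max_output_tokens
    fits = total <= budget.context_limit
    # Worst case: the model uses every output token it is allowed.
    cost = (prompt_tokens / 1000) * budget.price_per_1k_input \
        + (max_output_tokens / 1000) * budget.price_per_1k_output
    return fits, cost


# Placeholder numbers for illustration only, not real limits or prices.
example = Budget(context_limit=4096, price_per_1k_input=0.001, price_per_1k_output=0.002)

fits, cost = check_request(prompt_tokens=3500, max_output_tokens=1000, budget=example)
print(fits, round(cost, 6))
```

In this example the request does not fit, because 3,500 prompt tokens plus 1,000 reserved output tokens exceed the assumed 4,096-token limit; trimming the prompt or lowering the output allowance would bring it back under.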

How do I count tokens using this tool?

Simply enter your text into the tool and select the desired OpenAI model. The tool counts the tokens automatically in real time, giving you instant feedback as you type.
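If you want a similar count in your own scripts, one option is OpenAI's open-source `tiktoken` library. The sketch below is an assumption about how such a count could be reproduced locally, not a description of how this tool works internally. The helper names `count_tokens` and `estimate_tokens` are hypothetical, and the fallback uses the rough rule of thumb of about four characters per token for English text.

```python
import math

try:
    import tiktoken  # third-party package: pip install tiktoken
except ImportError:
    tiktoken = None


def estimate_tokens(text: str) -> int:
    """Rough estimate: about four characters per token for English text."""
    return math.ceil(len(text) / 4)


def count_tokens(text: str, model: str = "gpt-3.5-turbo") -> int:
    """Count tokens for `model`, or estimate them if tiktoken is not installed."""
    if tiktoken is None:
        return estimate_tokens(text)
    try:
        encoding = tiktoken.encoding_for_model(model)
    except KeyError:
        # Model name not known to tiktoken: fall back to a common encoding.
        encoding = tiktoken.get_encoding("cl100k_base")
    # disallowed_special=() treats text that looks like a special token as plain text.
    return len(encoding.encode(text, disallowed_special=()))
```

Calling `count_tokens("How many tokens is this prompt?", model="gpt-4")` returns an exact count when `tiktoken` is available and an estimate otherwise. Note that `tiktoken` may need to download its encoding files the first time it is used.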

Is my data secure when using this tool?

Yes, your prompts are never stored or transmitted over the internet, ensuring your data remains private and secure during use.

Can I use this tool for different OpenAI models?

Absolutely! The Prompt Token Counter supports multiple OpenAI models, allowing you to choose the one you are working with and see the specific token limits for that model.